EasySegment - Detecting and Segmenting Defects

EasySegment is the deep learning segmentation library of Open eVision. It contains the unsupervised segmentation tool (EUnsupervisedSegmenter class).

Deep Learning Studio

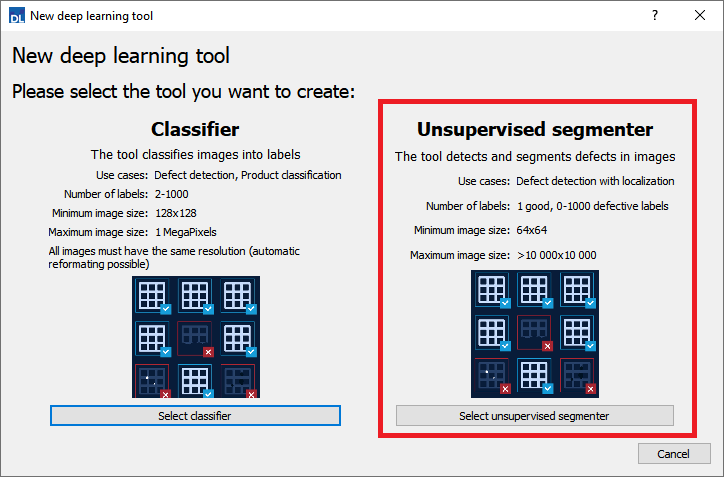

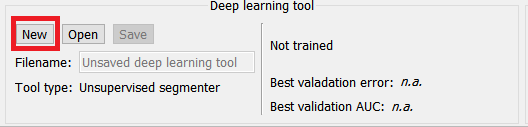

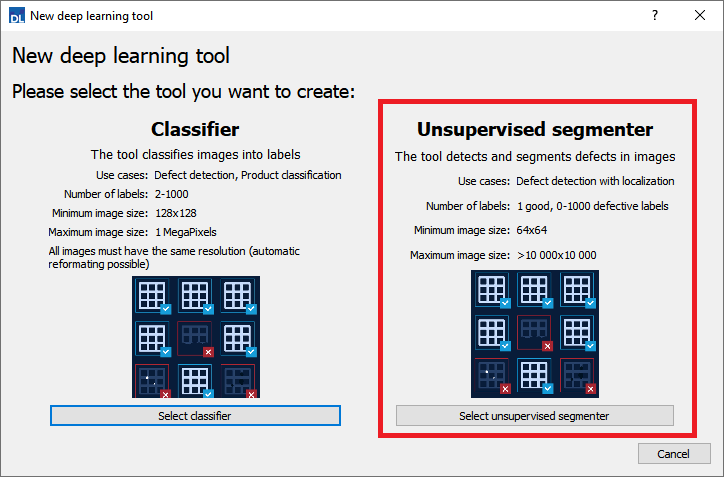

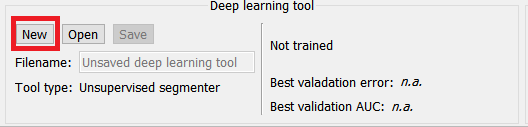

To create an unsupervised segmentation tool in Deep Learning Studio:

1. Start Deep Learning Studio.

2. Select Unsupervised segmenter in the New deep learning tool dialog.

The following dialog is displayed at the start of Deep Learning Studio or when you create a new deep learning tool from the toolbar.

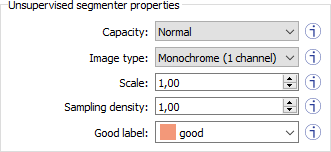

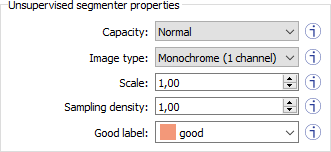

Configuration

The unsupervised segmenter tool has 5 parameters:

1. The Capacity of the neural network (default: Normal) represents the quantity of information it is capable of learning. A larger capacity makes the tool slower.

   In the API:

2. The Image type (default: Monochrome (1 channel)):

   In the API:

□ The sampling density indicates how much overlap there is between the image patches: the overlap is (100 - 100/SamplingDensity) %.

5. The Good label is the name of the class that contains the good images.
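The overlap formula given above for the sampling density (100 - 100/SamplingDensity, in percent) can be checked with a few values. The following is a minimal sketch in plain Python, not a call into the Open eVision API; the function name `patch_overlap_percent` and the restriction to densities of at least 1 are assumptions made for illustration.

```python
def patch_overlap_percent(sampling_density: float) -> float:
    """Overlap between neighbouring image patches, in percent.

    Implements the formula from the text: 100 - 100 / SamplingDensity.
    """
    # Assumption: densities below 1 are rejected, since they would give
    # a negative overlap. The source does not state the valid range.
    if sampling_density < 1:
        raise ValueError("sampling density is assumed to be at least 1")
    return 100.0 - 100.0 / sampling_density


if __name__ == "__main__":
    for density in (1, 2, 4):
        print(f"density {density}: {patch_overlap_percent(density):.1f} % overlap")
```

Under this formula, a density of 1 means the patches do not overlap, a density of 2 gives 50 % overlap, and a density of 4 gives 75 % overlap.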

● An iteration corresponds to training on 10 000 image patches and computing the results for each training and validation image.

  □ The training process requires a large number of iterations to obtain good results.
  □ The default number of iterations is 50.
  □ The larger the number of iterations, the longer the training is and the better the results you may obtain.

Calling the EDeepLearningTool::Train method several times with the same training and validation dataset is equivalent to calling it once but with a larger number of iterations.

Call EDeepLearningTool::GetNumTrainedIterations to get the total number of iterations used to train the tool.

● The training process is asynchronous:

● The batch size corresponds to the number of image patches that are processed together.

  □ The training is influenced by the batch size.
  □ A large batch size increases the processing speed of a single iteration on a GPU but requires more memory.
  □ The training process cannot learn a good model when the batch size is too small.
  □ It is common to choose powers of 2 as the batch size for performance reasons.
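To relate the iteration and batch size figures above, the arithmetic can be sketched as follows. This is an illustration in plain Python, not Open eVision code. It assumes that the 10 000 patches of one iteration are split into batches of the chosen size, with a final partial batch when the division is not exact; the source does not describe how the tool actually schedules batches. The function names are hypothetical.

```python
import math

# From the text: one iteration trains on 10 000 image patches.
PATCHES_PER_ITERATION = 10_000


def batches_per_iteration(batch_size: int) -> int:
    """Number of batches needed to cover one iteration.

    Assumption: a final, smaller batch is used when the batch size
    does not divide 10 000 exactly.
    """
    if batch_size <= 0:
        raise ValueError("batch size must be positive")
    return math.ceil(PATCHES_PER_ITERATION / batch_size)


def total_patches(num_iterations: int) -> int:
    """Total number of image patches seen after the given number of iterations."""
    return num_iterations * PATCHES_PER_ITERATION


if __name__ == "__main__":
    for batch_size in (16, 32, 64):
        print(batch_size, batches_per_iteration(batch_size))
    # Default of 50 iterations, then a second training call of 50 more.
    print(total_patches(50), total_patches(50 + 50))
```

With the default of 50 iterations, the tool trains on 500 000 patches. Two successive training calls of 50 iterations each amount to 100 iterations, which matches the statement that repeated calls are equivalent to one longer training.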

Validating the results

There are 2 types of metrics for the unsupervised segmentation tool:

□ Unsupervised metrics only use the results of the tool on good images. There is only one unsupervised metric: the error.

□ Supervised metrics require both good and defective images. The supervised metrics are the AUC (Area Under ROC Curve), the ROC curve, the accuracy, the good detection rate (also called the true negative rate), and the defect detection rate (also called the true positive rate).

The unsupervised segmentation tool computes a score for each image (see EUnsupervisedSegmenterResult::ClassificationScore). The label of a result is obtained by thresholding this score with the segmenter classification threshold (EUnsupervisedSegmenter::ClassificationThreshold). Consequently, the supervised metrics also depend on the value of this classification threshold.
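The dependence of the supervised metrics on the threshold can be illustrated with a small standalone sketch. It is plain Python with made-up scores, not the Open eVision API. It assumes that a score at or above the threshold is labelled defective; the source does not state the direction of the comparison or whether it is inclusive. All names are hypothetical.

```python
def classify(score: float, threshold: float) -> str:
    # Assumption: higher scores indicate defects, and the comparison is inclusive.
    return "defective" if score >= threshold else "good"


def supervised_metrics(scores, labels, threshold):
    """Accuracy, good detection rate and defect detection rate at one threshold."""
    predictions = [classify(s, threshold) for s in scores]
    pairs = list(zip(predictions, labels))
    tp = sum(p == "defective" and t == "defective" for p, t in pairs)
    tn = sum(p == "good" and t == "good" for p, t in pairs)
    fp = sum(p == "defective" and t == "good" for p, t in pairs)
    fn = sum(p == "good" and t == "defective" for p, t in pairs)
    if tn + fp == 0 or tp + fn == 0:
        raise ValueError("both good and defective images are required")
    return {
        "accuracy": (tp + tn) / len(pairs),
        "good_detection_rate": tn / (tn + fp),    # true negative rate
        "defect_detection_rate": tp / (tp + fn),  # true positive rate
    }


# Illustrative data only: three good and three defective images.
scores = [0.10, 0.20, 0.55, 0.40, 0.70, 0.90]
labels = ["good", "good", "good", "defective", "defective", "defective"]

if __name__ == "__main__":
    for threshold in (0.3, 0.5):
        print(threshold, supervised_metrics(scores, labels, threshold))
```

On this data, lowering the threshold from 0.5 to 0.3 raises the defect detection rate from 2/3 to 1 while the good detection rate stays at 2/3, which shows how the same scores yield different metrics depending on the threshold.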

The ROC curve (Receiver Operating Characteristic) is the plot of the defect detection rate (the true positive rate) against the rate of good images classified as defective (the false positive rate). It is obtained by varying the classification threshold. The ROC curve shows the possible trade-offs between the good detection rate and the defect detection rate.

The area under the ROC curve (AUC) is independent of the chosen classification threshold and represents the overall performance of the tool. Its value is between 0 (bad performance) and 1 (perfect performance).
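The construction of the ROC curve and its area can be sketched in a few lines. This is an illustration in plain Python with made-up scores, not the way Open eVision computes these values internally. It assumes that a score at or above the threshold is classified as defective, and it integrates the curve with the trapezoidal rule. All names are hypothetical.

```python
def roc_points(scores, labels):
    """(false positive rate, true positive rate) for each candidate threshold."""
    n_good = labels.count("good")
    n_defective = labels.count("defective")
    if n_good == 0 or n_defective == 0:
        raise ValueError("both good and defective images are required")
    points = [(0.0, 0.0)]  # threshold above every score: nothing flagged
    # Lowering the threshold step by step flags more and more images.
    for threshold in sorted(set(scores), reverse=True):
        flagged = [l for s, l in zip(scores, labels) if s >= threshold]
        fpr = flagged.count("good") / n_good
        tpr = flagged.count("defective") / n_defective
        points.append((fpr, tpr))
    return points


def area_under_curve(points):
    """Trapezoidal integration of the ROC points."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Illustrative data only: three good and three defective images.
scores = [0.10, 0.20, 0.55, 0.40, 0.70, 0.90]
labels = ["good", "good", "good", "defective", "defective", "defective"]

if __name__ == "__main__":
    points = roc_points(scores, labels)
    print(points)
    print(area_under_curve(points))
```

For this data, the area is 8/9: of the 9 possible (good, defective) image pairs, 8 have the defective image scored higher than the good one. A tool that separates the two groups perfectly would reach an area of 1.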

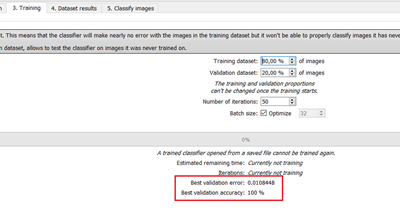

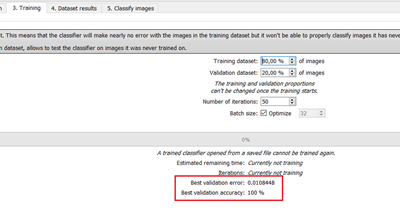

In Deep Learning Studio:

● In the Training tab, the metrics Best validation error and Best validation AUC are computed during the training on the validation dataset without using data augmentation. The validation error, the training error and the validation AUC are plotted for each iteration.

● In the Dataset results tab, various metrics, the confusion matrix, a cumulative score histogram, and the ROC curve are displayed. You can also change the classification threshold directly in this tab.

  □ The cumulative score histogram shows the cumulative proportion of good (in green) and defective (in red) images with respect to the scores of the image.
  □ You can change the classification threshold in 3 ways: direct input, dragging the threshold line in the score histogram and selecting a point on the ROC curve.

Applying the tool to new images

In Deep Learning Studio:

● Open the Classify images tab to:

  □ Apply the segmenter to new images.
  □ Display detailed results for each image of the main dataset.

● Once the unsupervised segmenter is trained, call EUnsupervisedSegmenter::Apply to detect and segment defects in an Open eVision image.

This method returns an EUnsupervisedSegmenterResult object:

The value of a defective pixel is proportional to the importance of the defect at that position.
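As a rough illustration of how such pixel values might be read, the sketch below treats a segmentation map as a plain 2D list of numbers. It does not use the EUnsupervisedSegmenterResult class, whose accessors are not described here. It assumes that non-defective pixels are 0 and that larger values mark more important defects; the data and function names are hypothetical.

```python
# Hypothetical segmentation map: 0 is assumed to mean "no defect",
# larger values mean a more important defect at that position.
segmentation_map = [
    [0, 0, 0, 0],
    [0, 40, 200, 0],
    [0, 0, 90, 0],
]


def defective_pixels(seg_map):
    """List of (row, column, value) for every pixel with a non-zero value."""
    return [
        (r, c, value)
        for r, row in enumerate(seg_map)
        for c, value in enumerate(row)
        if value > 0
    ]


def most_severe_pixel(seg_map):
    """The defective pixel with the largest value, or None if the map is clean."""
    pixels = defective_pixels(seg_map)
    return max(pixels, key=lambda p: p[2]) if pixels else None


if __name__ == "__main__":
    print(defective_pixels(segmentation_map))
    print(most_severe_pixel(segmentation_map))
```

Here the pixel at row 1, column 2 carries the largest value, so under the stated assumptions it would be the position of the most important defect.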